Once again, life has taken a different course than normal. This week is based solely on what’s happening in America and on something I’ve become passionate about learning and uncovering. This week is about American history. No, not the watered-down, whitewashed half-truth shoved down our throats in school. I’m speaking about what really happened within this nation, which still has ramifications to this very day.

Initially, I took what was told to me as truth. As a child, that’s what you’re taught to do. Why would someone knowingly deceive a child into believing something that’s false? I knew that books attempted to make light of slavery, but many of the defining moments in history were also written with Europeans as the protagonists of the story, and that’s not accurate.

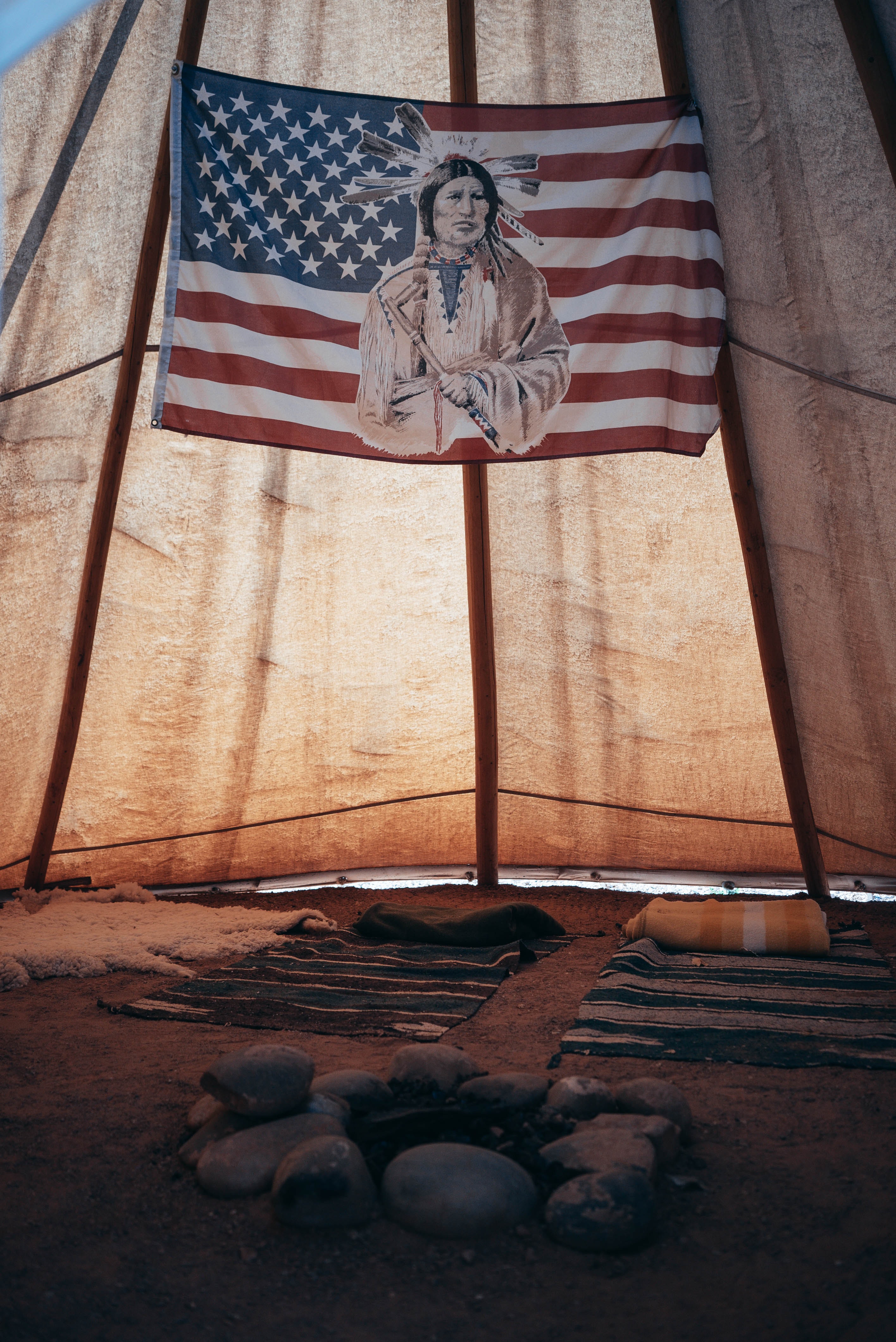

Reading is essential here!! I am the first person to admit that I don’t do enough reading in this area, but that was only because I thought it was boring. Now I’m finding out that it’s honestly just horrifying. As a kid, I remember we would call someone who gave you something and then wanted to take it back an “Indian giver.” I continued to say it out of ignorance, but recently I asked my husband why that was even a thing. If you peek into history, you’d know that the Indians gave as a sign of friendship, only to be taken advantage of, stolen from, and killed (by disease or decision). This makes me sad. And the idea of pushing them onto reservations because their lands were stolen from them (we have to admit this, because it’s tiring acting as if lands were just “discovered”) is by no means compensation for what was taken. Sacred ancestral lands are still having to be fought for because the government cares more about raping the land of resources than respecting it. This is just one instance of an ethnic group experiencing horrible treatment.

So here’s my connection to where we currently are: we were lied to and led to believe in something that didn’t exist. Once we admit this fact, we can move to correct the wrongs. Nothing signed at the beginning of America was designed to include or promote anyone other than White men. White women were able to come along because men needed them. The horrible practices instituted to sideline Black Americans since the end of slavery are no secret. If you aren’t sure what I’m talking about, please go find books related to this subject (usually I’m game to throw out so many book titles, but everyone has access to Google, so if you really want to know, you’ll search for it). There are so many false narratives believed to be true that they have tainted our current society. Acknowledgement is the only way we get better from here.

For example, the Civil War has some foundational footing in slavery (I don’t care if your family fought for the South... it is what it is). Once again, reading for information without all the emotional connection would serve many people well and show them that what they’ve believed is wrong. I don’t expect everyone to change their mind, but sometimes just encountering different information can produce a better result for future generations. Systems like redlining, and the segregation that made Green Books a necessity, were established to keep Black Americans “in their place”, but we are still expected to say the Pledge of Allegiance, which speaks of equality and unity. At some point, the irony gets old. Songs created about this great land were made by White men for White men... can we just go ahead and quit skirting around this so we can heal and move on? We shouldn’t be arguing about this anymore... there’s plenty of evidence, but we don’t have to stay here!!! We will never move on if someone doesn’t start admitting that our American history is one of murder, thievery, and lies.

Books need to quit attempting to paint a picture of peaceful encounters and a pure desire to make life better for everyone. If we could just tell the truth, we could be more impactful in making the necessary changes. But we won’t read. We would rather watch a news channel for information (which is biased based on political affiliation) instead of forming opinions and thoughts based on facts that are presented appropriately in context. It’s all about entertainment instead of true encounters that produce internal change, which inevitably aids in creating social change. We the people can do this, but it takes each of us being honest and bringing truths to the table and not traditions.

Is there anything you’ve learned about history that has changed your perspective?
